Long before AI-generated content, accuracy mattered.

And it remains essential, especially when you create content to build trust with your audience.

Whether you’re editing AI-created content, writing original articles, or fact-checking a podcast script, this three-part checklist will help make sure you’re not spreading misinformation and breaking your audience’s trust. The sections cover:

- Checking original sources

- Statistics and research-based information

- Third-party sources

Check your content for accurate details on original sources

Identify every original source included in the content – most will be people. (Original sources include anyone you’ve interviewed and information from your company.)

These questions help assess the person’s veracity.

Does the source exist? Are their identification details accurate?

Some people falsely represent themselves or their companies. They inflate their title, what they do, or their background. Go to their company’s website to see if they’re on the team. If they’re not listed, call the company to verify.

Is the source’s name spelled correctly? Is it spelled correctly in every place?

In journalism school, you’d fail an assignment if you misspelled a name. I pay close attention to the first identification. But sometimes, near the end of the article, I unconsciously start misspelling it. Check every place the name appears.
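
If a second set of eyes isn't available, a short script can flag likely misspellings for review. Here is a minimal Python sketch that uses the standard library's `difflib` to find phrases that look almost, but not exactly, like the correct name. The function name `find_name_variants` and the 0.8 similarity cutoff are illustrative assumptions, not part of any tool mentioned in this article.

```python
import difflib
import re


def find_name_variants(text, correct_name, cutoff=0.8):
    """Return phrases in `text` that resemble `correct_name` but don't match it exactly.

    Hypothetical helper for illustration; the cutoff is an assumed starting point.
    """
    words_in_name = len(correct_name.split())
    # Split the text into words, keeping inner apostrophes and hyphens.
    tokens = re.findall(r"[^\W\d_]+(?:['\u2019-][^\W\d_]+)*", text)
    # Build every run of consecutive words that is as long as the name.
    candidates = sorted(
        {
            " ".join(tokens[i : i + words_in_name])
            for i in range(len(tokens) - words_in_name + 1)
        }
    )
    if not candidates:
        return []
    close = difflib.get_close_matches(
        correct_name, candidates, n=len(candidates), cutoff=cutoff
    )
    # Exact matches are fine; only near misses need a human look.
    return sorted(c for c in close if c != correct_name)


draft = "Ann Gynn edits the blog. Later, Anne Gynn and Ann Gyn appear."
print(find_name_variants(draft, "Ann Gynn"))
```

Treat the output as a list of places to look, not a verdict. A possessive such as "Gynn's" can show up as a near miss, so a person still makes the final call.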

Is the person’s title accurate?

LinkedIn and other social media profiles and bios on company websites can be good resources for verifying titles. If the title you find on one of these resources differs from what’s included in the source’s email signature, resolve the conflicting information.

Is the company name spelled correctly?

If the company name includes unusual lowercase letters or spacing, make a note in the file so no one changes it later in the production process.

Are the proper pronouns used?

Does the person use he/him, she/her, or they/them? Don’t assume. Incorporate the preferred pronoun question when you ask them to provide their identification details.

Did you get the quote or information directly from the person cited?

Often a PR rep or other comms person may email a quote or answer to your questions. If you haven’t worked with that person, verify the quote with the person it’s attributed to. Ask the PR person for the contact info (or to make an introduction by email so the person is more likely to respond). Verify directly by email or phone.

(Note: Verifying a quote from another site relates more to the publishing site’s credibility, which I’ll cover in the section on third-party sources.)

TIP: If you ever doubt whether the quote comes from a real person, ask them to call you. I did this the other day. The person responded by email but never called. I deleted their information from the article. A phone call isn’t a fail-safe option, but it helps.

Check statistics and research-based information for accuracy

Is a source cited for each statistic or other data?

Every piece of data-backed information should cite the original source. If it doesn’t, you shouldn’t use it.

Is the cited source the original source?

Way too often, the cited source is five steps removed from the native source or, worse, 10 steps removed from a bad link or source.

Don’t cite a stat cited by another blog that cited a mega infographic that didn’t include the original source.

Is the number accurately represented?

This rabbit-hole fact-finding also often reveals misinterpreted data – like the child’s game of telephone. With each reuse, the information is tweaked just enough or so outdated that it’s no longer accurate.

For example, 45 Blogging Statistics and Facts To Know in 2023 includes a statistic that says 77% of people regularly read blogs. The article cited (and linked to) an undated article on Impact as the source. Impact cites The West Program as the source and links to an article I couldn’t access because my security software identified it as malicious.

So I searched for the stat and the cited source. Interestingly, the search didn’t return anything from The West Program, but it did show many articles referencing the stat and attributing it to The West Program. In just the first results, I could see an article from 2013 quoting the stat.

So even if the stat was valid at some point, it’s still at least 10 years old. Yet, it appears in an article about blog stats for 2023.

But accurate representation mistakes aren’t limited to sloppy citations. They can happen when using the native source of the data too.

For example, an article about what salespeople do with content marketing that quotes CMI’s annual research would not be accurate. Marketers, not salespeople, take that survey. Their opinions may or may not be the same as those of sales teams.

Or consider this real-life example of misinterpreted data. Someone at NASA wrote a poorly worded release, using this statement: “Researchers confirmed an exoplanet, a planet that orbits another star, using NASA’s James Webb Space Telescope for the first time.”

Many reporters wrote that it was the first time an exoplanet had been discovered. In truth, that happened years earlier. The news was that it was the first time researchers had used the Webb telescope to confirm one. Those inaccurate stories made their way into Google’s AI tool Bard, which generated an article about the telescope that included the incorrect fact. Even worse? Google promoted the article in Bard’s debut announcement.

Does the research meet professional standards?

You don’t need to be an expert in qualitative or quantitative research to weed out misinterpreted research. Review who was surveyed or what was analyzed. Look at the questions asked.

Check the sample size, which you’ll usually find in the executive summary or a labeled paragraph in the introduction or at the end of the research.

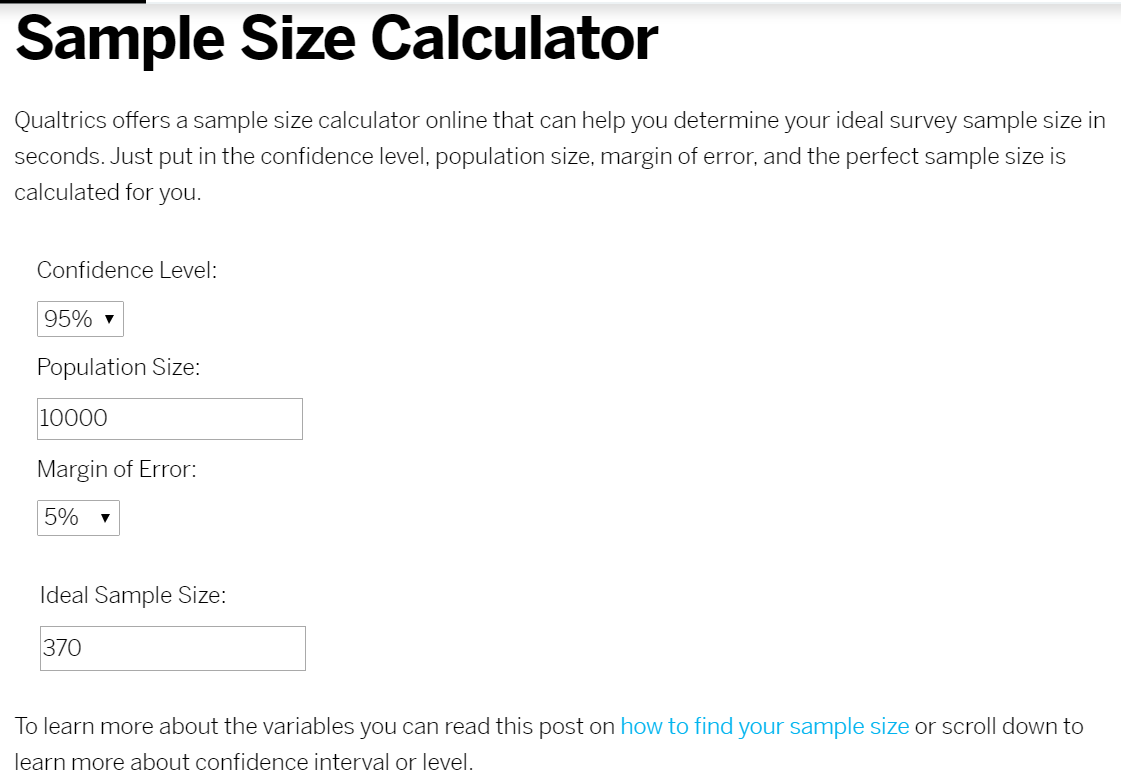

Why does sample size matter? Because a survey of, say, 100 music enthusiasts is insufficient to accurately predict the opinion of a group of 10,000 music enthusiasts. The XM Blog from Qualtrics, an experience management company, offers resources and explanations for assessing research quality, including the sample calculator tool shown below.

TIP: Confidence level and margin of error are related. In the case illustrated above, a survey of 370 people represents a population of 10,000 at a 95% confidence level with a 5% margin of error. That means that 95 times out of 100, the survey results would fall within plus or minus five percentage points of the larger group’s.
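
To see where a number like 370 comes from, here is a minimal Python sketch of a common textbook approach: Cochran's sample-size formula with a finite population correction. It's an assumption that this matches what any particular online calculator does internally. The function names and the default 50% response proportion (the most conservative choice) are illustrative.

```python
import math
from statistics import NormalDist


def z_score(confidence):
    """Two-sided z-score for a confidence level, e.g. about 1.96 for 0.95."""
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)


def sample_size(population, confidence=0.95, margin_of_error=0.05, proportion=0.5):
    """Respondents needed to represent `population` at the given settings."""
    z = z_score(confidence)
    # Sample size for a very large population.
    n0 = z**2 * proportion * (1 - proportion) / margin_of_error**2
    # Adjust downward because the population is finite.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


def margin_of_error(sample, population, confidence=0.95, proportion=0.5):
    """Margin of error (as a fraction) for a sample already collected."""
    z = z_score(confidence)
    standard_error = math.sqrt(proportion * (1 - proportion) / sample)
    correction = math.sqrt((population - sample) / (population - 1))
    return z * standard_error * correction


print(sample_size(10_000))            # 370
print(margin_of_error(100, 10_000))   # a fraction between 0.09 and 0.10
```

Under these assumptions, a survey of only 100 people from a group of 10,000 carries a margin of error of roughly plus or minus 10 percentage points, about double the five points you get with 370 respondents.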

When the original content overstates the data, you don’t necessarily need to delete it. Simply reframe it accurately. Take our music enthusiasts. It’s not OK to infer that they speak for a whole population, but it is OK to say, “10 out of 100 music enthusiasts surveyed said they like to attend jazz concerts.” It may lessen the impact, but it strengthens the audience’s trust.

Check the accuracy of information from third-party sources

Make sure the facts mentioned in your content are accurate, whether in opinion pieces, a source’s comment, or picked up from a third-party source. (You don’t want to perpetuate a myth or false statement even if you attribute it to another source or site.)

Is the information accurate?

Depending on the information, you can use several sources to verify it:

- Google search: Put a quote or information in the search bar – use direct quotes to see if the same information has been published elsewhere. Then move on to Moz’s Link Explorer tool to check the domain authority score (see below) to evaluate the site’s authenticity.

- Google Scholar: This site is an excellent resource for verifying information through scholarly journals, textbook publishing companies, etc.

- FactCheck.org: Check public policy and politically shared claims. A project of the Annenberg Public Policy Center of the University of Pennsylvania, the site allows visitors to ask verification questions directly. It created this video to help people spot bogus claims. And it’s even more relevant now than it was when published eight years ago.

Is the information from reputable sources?

Try these resources to help you assess the quality of information from external sites.

- Moz Link Explorer: You might already use Moz’s domain authority score in your SEO backlink strategy. You also can use it for this process. Check a site’s domain authority score to understand how trusted and reputable it is.

- Plagiarism Detector: Whether it’s written by one of your brand’s authors or comes from a freelancer, contributor, or other source, make sure the text hasn’t been copied intentionally or unintentionally from another work. This free version of Plagiarism Detector can be a good start. Paid tools also are available. Some programs, such as Microsoft Word, have plagiarism checkers built in.

Don’t forget to check author bio and proofreading details

Check the details in the author’s bio, too. After all, if the author isn’t accurately represented, your audience is far less likely to trust any information provided in the content.

Now that you’ve gone through the factual and contextual accuracy checklist, your content is ready for a different accuracy checklist – editing and proofreading for spelling, grammar, style, working links, and overall understanding.

Updated from a March 2020 story.

The author selected all tools mentioned in this article. If you’d like to suggest a fact-checking tool, please add it in the comments.

Cover image by Joseph Kalinowski/Content Marketing Institute